Morgan Badoud

Senior Manager Digital Assurance

PwC Switzerland

AI: not new, but immensely more powerful

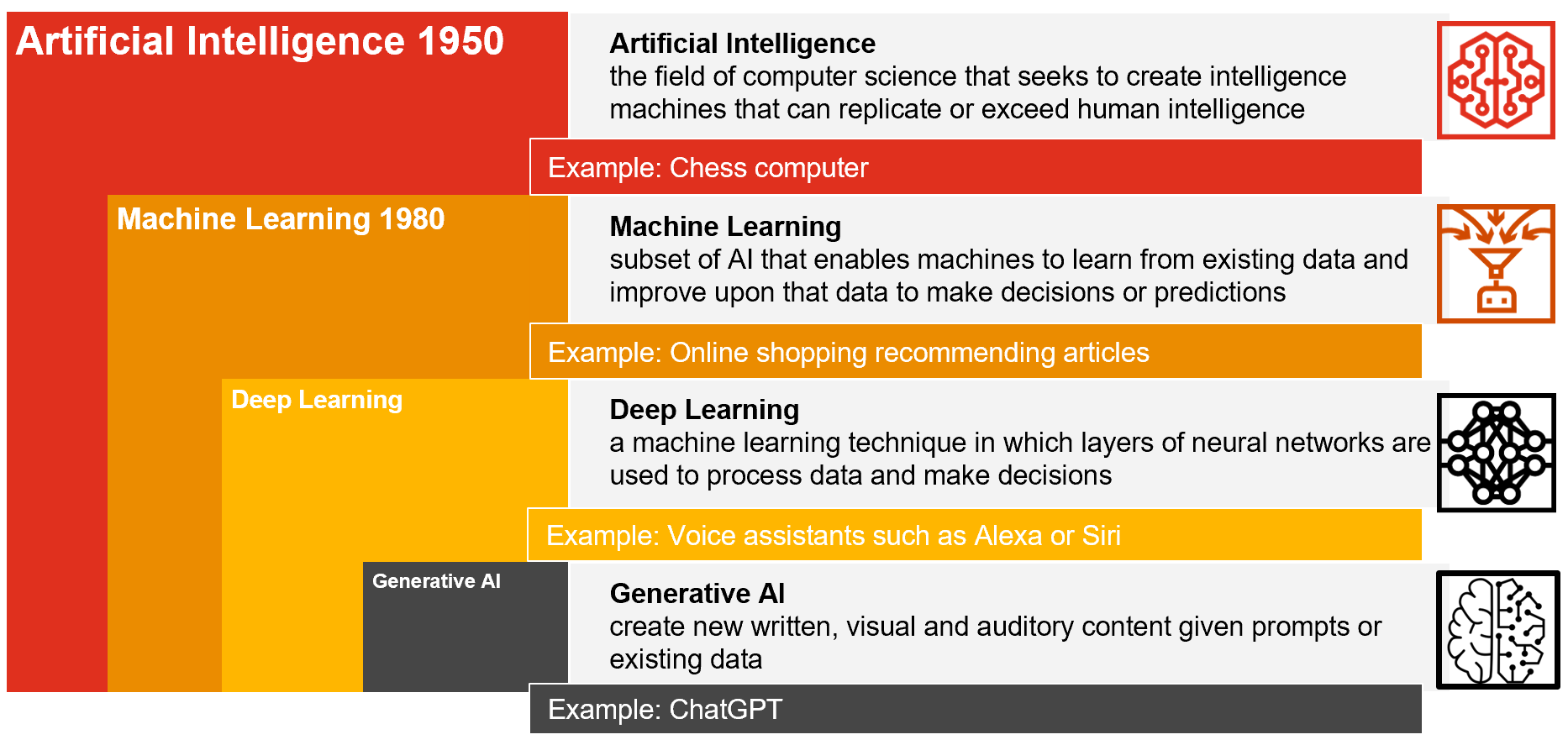

Artificial intelligence (AI) may be the buzzword of the moment, but it is not new at all. AI has been echoing through the corridors of tech companies for decades. Recently, however, it has undergone monumental shifts. From its humble beginnings to the era of deep learning, AI has moved from simply processing information to generating entirely new content. Among its most recent advancements stands generative AI.

The history of generative AI (Source: PwC)

Generative AI is an offshoot of the broader spectrum of deep learning. Specifically, it’s trained to create, enrich, and analyse unstructured data such as text, voice, and images. In business, this is precisely the type of data that is critical to understanding the ever-changing needs of stakeholders. Rather than simply analysing and interpreting, as was the norm with traditional AI models, generative AI creates new content based on patterns it deciphers from its training data. This significant difference opens up a wealth of possibilities. Imagine AI not only sifting through existing art, music, or text, but creating new masterpieces and even entire virtual worlds. The huge potential of generative AI has important implications for businesses.

The phenomenal rise of generative AI in business…

In December 2022, the world sat up and took notice of the meteoric rise of ChatGPT. Developed by OpenAI, ChatGPT revolutionised natural language processing and achieved user adoption at a rate that left titans like Netflix, Facebook, and Instagram far behind. With ChatGPT reaching a million users in just 5 days, compared to 10 months for Facebook and 3.5 years for Netflix, the message was clear: demand for advanced AI technologies is skyrocketing.

This surge in demand isn’t just a momentary trend. It signals a shift in the global business landscape. From production lines to finance departments, from IT corridors to marketing meetings, AI is driving innovation, enabling new business models, and attracting investors. What’s more, companies aren’t just looking to adopt generative AI; they want to create their own bespoke AI models and tailor the solutions to their unique needs.

…comes with risks and trust issues

However, as with any powerful tool, there are inherent risks. Companies must balance speed and trust when adopting generative AI, ensuring a risk-based approach to gain a competitive edge and maintain stakeholder trust. Effective risk management is vital for leveraging the full benefits of this transformative technology.

Two of the main concerns with AI, particularly generative AI, are data protection and intellectual property (IP) challenges. High-end models such as ChatGPT are often hosted on cloud services, leading to potential data vulnerabilities. In addition, the use of generative models can infringe on the intellectual property of others, potentially leading to legal issues. Another important concern is the data on which the model is trained. If it’s trained on biased data, its outputs may reflect the same biases. It’s therefore critical for organisations to carefully examine their AI’s underlying data sets to ensure fairness, neutrality, and relevance.

Furthermore, while companies are under pressure to collect vast amounts of data, the key challenge is how to use it effectively. There is a risk in relying too much on generated data, especially without critical evaluation. With their engaging writing styles, chatbots can sometimes provide supposedly correct information that’s in fact biased or simply wrong. Relying on such data can lead to ill-informed decisions, potentially damaging a company’s service quality and reputation. Dealing with the AI-generated information landscape requires caution, curiosity, and a discerning eye.

One underestimated concern is the new security threats created by the use of AI-driven tools. AI not only enhances attackers’ capabilities but also creates new categories of risk that need to be specifically addressed. The steady stream of newly discovered weaknesses in AI systems shows how fragile these tools can be.

The main risks associated with generative AI (Source: PwC)

How to address the risks associated with AI? Start with asking the right questions.

As artificial intelligence becomes an integral part of business processes, understanding and managing the associated risks is paramount. Every organisation, regardless of industry or size, needs to develop a proactive approach to these risks. It starts with asking the right questions:

1. Strategic alignment:

Does your company’s AI initiative align seamlessly with your strategic goals and core values?

Are there any potential misalignments or conflicts that need to be addressed?

2. Risk assessment:

Do you have a clear overview of the current use of AI-based tools embedded in your business processes?

Have you comprehensively identified and subsequently prioritised the primary risks tied to your AI initiatives?

What preventive measures have been taken to mitigate risks?

3. Audit and assurance:

How effectively have AI-related processes and controls been incorporated into your external audits?

Does your audit process require specialised techniques when examining AI-related financial transactions or datasets?

Taking the time to reflect on these questions helps ensure that AI aligns with your business objectives and that its implementation is smooth, secure, and strategic. As AI continues to advance rapidly, businesses must remain vigilant and ensure that their internal audit and risk functions are well-equipped to handle the challenges and promises of this disruptive technology.

Towards responsible AI implementation

Against this backdrop, the discourse on responsible AI development and implementation is gaining momentum. Ethical aspects of generative AI such as potential bias, privacy, and the implications of deepfakes are under intense scrutiny. Frameworks such as AIC4 and ISO/IEC 23894 are emerging as important guidelines for responsible AI development. There are also widespread initiatives aimed at regulating the use of AI, such as the EU AI Act and the US Blueprint for an AI Bill of Rights.

An aspect that is sometimes overlooked is how internal audit and risk functions should evolve to accommodate AI. These functions must understand and align with the company’s AI goals, and strategically audit core datasets to ensure compliance and data security. A deep understanding of the intricacies of generative AI systems is indispensable. A rigorous evaluation of the AI model outputs is equally vital, ensuring that they meet benchmarks of fairness, accuracy, and relevance. Finally, fostering a culture of intellectual curiosity is paramount. Generative AI should be seen as a tool, not as a replacement for human intuition and expertise.

The top three actions to get the best out of AI

To use AI responsibly and realise its full potential, business leaders need to take three key actions:

- Ensure a governance structure and an enterprise-wide AI risk management framework.

- Ensure that board members have a good understanding of AI technology, its potential and its limitations.

- Consider establishing a dedicated committee or subcommittee within the board to focus on AI governance and ethics.

The AI landscape is dynamic, with as many opportunities as challenges. While businesses are excited about the potential benefits, it’s important to approach AI with caution and to keep ethics and human input at the forefront. AI systems need to be built on solid ethical principles, as they will affect everyone who uses them.

Generative AI offers remarkable opportunities to increase efficiency and competence, but its introduction must be accompanied by a culture of intellectual curiosity and a heightened awareness of the inherent risks. We must remember that it is a tool, not a definitive guide, and emphasise the essential balance between AI’s potential and human judgement.

Building trust in AI

If you would like to learn more about how to mitigate risks while implementing responsible AI-supported solutions in your company, please feel free to reach out to us. We can help you at every stage: from strategy (definition of the use case) to execution and, finally, monitoring of the AI.